Sentiment Classification Using BERT

BERT (Bidirectional Encoder Representations from Transformers) was proposed by researchers at Google AI Language in 2018. Although its main aim was to improve the understanding of the meaning of Google Search queries, BERT has become one of the most important and complete architectures for a variety of natural language tasks, producing state-of-the-art results on sentence-pair classification, question answering, and other tasks. For more details on the architecture, please see this article.

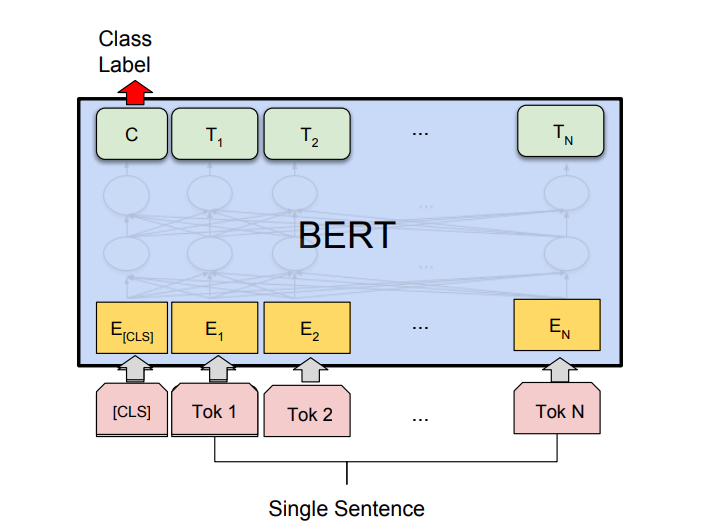

Architecture:

One of the most important features of BERT is its adaptability to different NLP tasks with state-of-the-art accuracy (similar to the transfer learning we use in computer vision). To this end, the paper also proposes architectures for different tasks. In this post, we will use the BERT architecture for single-sentence classification tasks, specifically the architecture used for the CoLA (Corpus of Linguistic Acceptability) binary classification task. In the previous post about BERT we discussed the architecture in detail, but let's recap some of its important details:

BERT comes in two versions:

- BERT (BASE): 12 encoder layers with 12 bidirectional self-attention heads and 768 hidden units.

- BERT (LARGE): 24 encoder layers with 16 bidirectional self-attention heads and 1024 hidden units.
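As a rough sanity check on these sizes, the sketch below derives the per-head dimension and an approximate parameter count from the number of layers, heads, and hidden units. It assumes the released uncased configuration (a 30,522-token vocabulary, 512 positions, 2 segment types, a feed-forward size of four times the hidden size, and 16 attention heads for BERT LARGE); the class and method names are illustrative and are not part of any BERT API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BertSize:
    """Illustrative container for the model sizes (not a BERT API)."""
    layers: int
    heads: int
    hidden: int
    vocab: int = 30522      # assumption: uncased WordPiece vocabulary size
    positions: int = 512    # assumption: maximum number of positions
    segments: int = 2       # assumption: sentence A / sentence B

    @property
    def head_dim(self) -> int:
        # Each attention head works on an equal slice of the hidden vector.
        return self.hidden // self.heads

    def approx_params(self) -> int:
        h = self.hidden
        ffn = 4 * h  # assumption: feed-forward size is 4x the hidden size
        embeddings = (self.vocab + self.positions + self.segments) * h + 2 * h
        attention = 4 * (h * h + h)              # query, key, value, output
        feed_forward = (h * ffn + ffn) + (ffn * h + h)
        layer_norms = 2 * (2 * h)                # two LayerNorms per layer
        pooler = h * h + h
        per_layer = attention + feed_forward + layer_norms
        return embeddings + self.layers * per_layer + pooler


BERT_BASE = BertSize(layers=12, heads=12, hidden=768)
BERT_LARGE = BertSize(layers=24, heads=16, hidden=1024)

for name, size in [("BASE", BERT_BASE), ("LARGE", BERT_LARGE)]:
    millions = size.approx_params() / 1e6
    print(f"{name}: head_dim={size.head_dim}, params ~ {millions:.0f}M")
```

Under these assumptions both models use 64-dimensional attention heads, and the totals land close to the roughly 110M and 340M parameters usually quoted for the two versions.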

For the TensorFlow implementation, Google has provided two variants of both BERT BASE and BERT LARGE: Uncased and Cased. In the uncased variant, letters are lowercased before WordPiece tokenization.
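To make the uncased preprocessing concrete, here is a minimal sketch of lowercasing followed by greedy longest-match WordPiece splitting. It uses a tiny made-up vocabulary rather than the real vocab.txt, and it leaves out steps the real tokenizer also performs (such as punctuation splitting and accent stripping), so treat it as an illustration rather than the repository's implementation.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedily split one word into the longest matching vocabulary pieces."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        current = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # marks a continuation of a word
            if piece in vocab:
                current = piece
                break
            end -= 1
        if current is None:
            return [unk_token]  # no piece matched: the whole word is unknown
        pieces.append(current)
        start = end
    return pieces


def uncased_tokenize(text, vocab):
    """Lowercase the text, split on whitespace, then apply WordPiece."""
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece_tokenize(word, vocab))
    return tokens


# Toy vocabulary, for illustration only.
TOY_VOCAB = {"the", "movie", "was", "un", "##believ", "##able", "great"}

tokens = uncased_tokenize("The movie was UNBELIEVABLE", TOY_VOCAB)
print(tokens)  # ['the', 'movie', 'was', 'un', '##believ', '##able']
```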

Implementation:

- First, we need to clone the BERT GitHub repo to make the setup easier.

Code:

    !git clone https://github.com/google-research/bert.git

    Cloning into 'bert'...
    remote: Enumerating objects: 340, done.
    remote: Total 340 (delta 0), reused 0 (delta 0), pack-reused 340
    Receiving objects: 100% (340/340), 317.20 KiB | 584.00 KiB/s, done.
    Resolving deltas: 100% (185/185), done.

- Now, we need to download the BERT BASE model using the following link and unzip it into the working directory (or the desired location).

Code:

    # Download the uncased BERT BASE model from Google Cloud Storage
    !wget https://storage.googleapis.com/bert_models/2018_10_18/uncased_L-12_H-768_A-12.zip
    !unzip uncased_L-12_H-768_A-12.zip

    Archive: uncased_L-12_H-768_A-12.zip
      creating: uncased_L-12_H-768_A-12/
      inflating: uncased_L-12_H-768_A-12/bert_model.ckpt.meta
      inflating: uncased_L-12_H-768_A-12/bert_model.ckpt.data-00000-of-00001
      inflating: uncased_L-12_H-768_A-12/vocab.txt
      inflating: uncased_L-12_H-768_A-12/bert_model.ckpt.index
      inflating: uncased_L-12_H-768_A-12/bert_config.json

- We will be using TensorFlow 1.x. Google Colab has a magic command called tensorflow_version that switches between TensorFlow versions.

Code:

    %tensorflow_version 1.x

TensorFlow 1.x selected.

- Now, we will import the modules needed for this project: NumPy, pandas, scikit-learn, and Keras from TensorFlow. These come preinstalled in Colab; make sure to install them in your own environment.

Code:

    import os
    import re
    import numpy as np
    import pandas as pd
    from sklearn.preprocessing import LabelEncoder
    from sklearn.model_selection import train_test_split
    import tensorflow as tf
    from tensorflow import keras
    import csv
    from sklearn import metrics

- Now we will load the IMDB sentiment dataset and do some preprocessing before training. To load the dataset, we will follow the loading code from this TensorFlow Hub tutorial.

Code:

    # Load all reviews in a directory into a DataFrame with a "sentence" column
    # and a "sentiment" column (the rating parsed from each file name).
    def load_directory_data(directory):
        data = {}
        data["sentence"] = []
        data["sentiment"] = []
        for file_path in os.listdir(directory):
            with tf.gfile.GFile(os.path.join(directory, file_path), "r") as f:
                data["sentence"].append(f.read())
                data["sentiment"].append(re.match(r"\d+_(\d+)\.txt", file_path).group(1))
        return pd.DataFrame.from_dict(data)

    # Merge positive and negative examples, add a polarity column and shuffle.
    def load_dataset(directory):
        pos_df = load_directory_data(os.path.join(directory, "pos"))
        neg_df = load_directory_data(os.path.join(directory, "neg"))
        pos_df["polarity"] = 1
        neg_df["polarity"] = 0
        return pd.concat([pos_df, neg_df]).sample(frac=1).reset_index(drop=True)

    # Download and process the dataset files.
    def download_and_load_datasets(force_download=False):
        dataset = tf.keras.utils.get_file(
            fname="aclImdb.tar.gz",
            origin="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz",
            extract=True)
        train_df = load_dataset(os.path.join(os.path.dirname(dataset), "aclImdb", "train"))
        test_df = load_dataset(os.path.join(os.path.dirname(dataset), "aclImdb", "test"))
        return train_df, test_df

    train, test = download_and_load_datasets()
    train.shape, test.shape

    Downloading data from http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz
    84131840/84125825 [==============================] - 8s 0us/step
    ((25000, 3), (25000, 3))
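The "sentiment" column comes from the review file names, which follow the pattern of a review id, an underscore, and a rating. The sketch below shows that parsing step in isolation; the helper name and the sample file names are made up for illustration.

```python
import re

# File names in the dataset look like "<review id>_<rating>.txt".
FILENAME_PATTERN = re.compile(r"(\d+)_(\d+)\.txt")


def parse_review_filename(file_name):
    """Return (review_id, rating) as integers, or None if the name does not match."""
    match = FILENAME_PATTERN.fullmatch(file_name)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


print(parse_review_filename("127_10.txt"))  # (127, 10)
print(parse_review_filename("README"))      # None
```

Note the escaped dot and the `\d` classes: without the backslashes, the pattern would look for the literal letter "d" and would fail on every real file name.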

- This dataset contains 50k reviews, 25k each for training and testing. We will sample 5k reviews from each of the train and test sets. Both sets contain three columns, which are listed below.

Code:

    # Sample 5k data points from both train and test
    train = train.sample(5000)
    test = test.sample(5000)

    # List the columns of the train and test data
    train.columns, test.columns

(Index(["sentence", "sentiment", "polarity"], dtype="object"), Index(["sentence", "sentiment", "polarity"], dtype="object"))

- Now, we need to convert the data into the specific format that the BERT model requires for training and prediction; for that we will use pandas DataFrames. Below are the columns required in the BERT training and test formats:

- guid: An id for the row. Required for both train and test data.

- label: The class label, 0 for negative and 1 for positive sentiment.

- alpha: A dummy column for text classification, but one that BERT expects during training.

- text: The review text to be classified. Required for both training and test data.
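To illustrate the training-row layout described above, the sketch below writes two made-up reviews as tab-separated rows with the standard library, in the order guid, label, alpha, text. The sample reviews are hypothetical; the point is only the column order and the need to keep each review on a single line.

```python
import csv
import io

# Hypothetical reviews with their polarity, for illustration only.
reviews = [
    ("A moving, beautifully shot film.", 1),
    ("Two hours I will\nnever get back.", 0),
]

buffer = io.StringIO()
writer = csv.writer(buffer, delimiter="\t", lineterminator="\n")
for guid, (text, polarity) in enumerate(reviews):
    # A newline inside a review would break the one-row-per-review layout.
    one_line_text = text.replace("\n", " ")
    writer.writerow([guid, polarity, "a", one_line_text])

train_tsv = buffer.getvalue()
print(train_tsv)
```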

Code:

    # Convert the training data into BERT format
    # (newlines are replaced with spaces so each review stays on one row)
    train_bert = pd.DataFrame({
        "guid": range(len(train)),
        "label": train["polarity"],
        "alpha": ["a"] * train.shape[0],
        "text": train["sentence"].replace(r"\n", " ", regex=True)
    })
    print(train_bert.head())
    print("-----")

    # Convert the test data into BERT format
    bert_test = pd.DataFrame({
        "guid": range(len(test)),
        "text": test["sentence"].replace(r"\n", " ", regex=True)
    })
    bert_test.head()

           guid  label  alpha  text
    14930     0      1      a  William Hurt may not be an American matinee id...
    1445      1      1      a  Rock solid giallo from a master filmmaker of t...
    16943     2      1      a  This movie surprised me. Some things were "cli...
    6391      3      1      a  This film may seem dated today, but remember t...
    4526      4      0      a  The Twilight Zone has achieved a certain mytho...
    -----
           guid  text
    20010     0  One of Alfred Hitchcock's three greatest films...
    16132     1  Hitchcock once gave an interview where he said...
    24947     2  I had nothing to do before going out one night...
    5471      3  tell you what that was excellent. Dylan Moran ...
    21075     4  I watched this show until my puberty but still...

- Now, we split the data into three parts (train, dev, and test) and save each as a TSV file in a folder (here "IMDB_dataset"). This is because the run_classifier script requires the dataset in TSV format.

Code:

    # Split the data into train and validation sets
    bert_train, bert_val = train_test_split(train_bert, test_size=0.1)

    # Save the train, validation, and test files to a folder as tab-separated values
    os.makedirs("bert/IMDB_dataset", exist_ok=True)
    bert_train.to_csv("bert/IMDB_dataset/train.tsv", sep="\t", index=False, header=False)
    bert_val.to_csv("bert/IMDB_dataset/dev.tsv", sep="\t", index=False, header=False)
    bert_test.to_csv("bert/IMDB_dataset/test.tsv", sep="\t", index=False, header=True)
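The train and dev files are saved without a header while the test file keeps one. As far as we can tell, this matches how the repository's CoLA processor reads the files: for train and dev it takes the label from the second column and the text from the fourth, and for test it skips the first line and takes the text from the second column. The sketch below is a simplified imitation of that behavior with made-up rows, not the repository's code.

```python
import csv
import io


def read_examples(tsv_text, set_type):
    """Return (text, label) pairs, roughly as the CoLA processor would read them."""
    examples = []
    rows = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    for i, row in enumerate(rows):
        if set_type == "test":
            if i == 0:
                continue  # only the test file has a header row
            examples.append((row[1], "0"))  # placeholder label for prediction
        else:
            examples.append((row[3], row[1]))
    return examples


# Made-up file contents, for illustration only.
train_tsv = "0\t1\ta\tGreat film.\n1\t0\ta\tDull and slow.\n"
test_tsv = "guid\ttext\n0\tWorth watching.\n"

train_examples = read_examples(train_tsv, "train")
test_examples = read_examples(test_tsv, "test")
print(train_examples)  # [('Great film.', '1'), ('Dull and slow.', '0')]
print(test_examples)   # [('Worth watching.', '0')]
```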

- In this step, we train the model using the following command. To execute shell commands in Colab, we put a ! sign in front of the command. The run_classifier script trains the model with the given arguments. Due to time and resource constraints, we will train for only 3 epochs.

Code:

    # Most of the arguments here are self-explanatory, but some need explanation:
    # task_name: discussed above; we perform binary classification, which is why we use cola.
    # vocab_file: the vocabulary file (vocab.txt) that maps WordPiece tokens to ids.
    # init_checkpoint: the TensorFlow checkpoint to start from; here, the downloaded BERT model.
    # max_seq_length: caps the number of WordPiece tokens kept from each review.
    # bert_config_file: the file containing the model's hyperparameter settings.
    !python bert/run_classifier.py \
      --task_name=cola \
      --do_train=true \
      --do_eval=true \
      --data_dir=/content/bert/IMDB_dataset \
      --vocab_file=/content/uncased_L-12_H-768_A-12/vocab.txt \
      --bert_config_file=/content/uncased_L-12_H-768_A-12/bert_config.json \
      --init_checkpoint=/content/uncased_L-12_H-768_A-12/bert_model.ckpt \
      --max_seq_length=64 \
      --train_batch_size=8 \
      --learning_rate=2e-5 \
      --num_train_epochs=3.0 \
      --output_dir=/content/bert_output/ \
      --do_lower_case=True \
      --save_checkpoints_steps 10000

    # Last few lines
    INFO:tensorflow:***** Eval results *****
    I0713 06:06:28.966619 139722620139392 run_classifier.py:923] ***** Eval results *****
    INFO:tensorflow: eval_accuracy = 0.796
    I0713 06:06:28.966814 139722620139392 run_classifier.py:925] eval_accuracy = 0.796
    INFO:tensorflow: eval_loss = 0.95403963
    I0713 06:06:28.967138 139722620139392 run_classifier.py:925] eval_loss = 0.95403963
    INFO:tensorflow: global_step = 1687
    I0713 06:06:28.967317 139722620139392 run_classifier.py:925] global_step = 1687
    INFO:tensorflow: loss = 0.95741796
    I0713 06:06:28.967507 139722620139392 run_classifier.py:925] loss = 0.95741796
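The global_step of 1687 in the log follows from the training settings: 4,500 training reviews (5,000 sampled minus the 10% validation split), a batch size of 8, and 3 epochs. The sketch below reproduces that arithmetic; the function name is made up, and the 10% warmup proportion is an assumption based on the script's default, since the command does not set it.

```python
def training_steps(num_examples, batch_size, num_epochs, warmup_proportion=0.1):
    """Return (total optimizer steps, warmup steps), truncating to integers."""
    total = int(num_examples / batch_size * num_epochs)
    warmup = int(total * warmup_proportion)  # assumption: default warmup of 10%
    return total, warmup


# 5,000 sampled reviews minus the 10% held out for validation.
num_train_examples = 5000 - int(5000 * 0.1)

total_steps, warmup_steps = training_steps(num_train_examples, batch_size=8, num_epochs=3.0)
print(total_steps, warmup_steps)  # 1687 168
```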

- Now we will use test data to evaluate our model with the following bash script. This script saves the predictions into a tsv file.

Code:

    # Predict on test.tsv.
    # Here we use a checkpoint saved during training as the initial model.
    !python bert/run_classifier.py \
      --task_name=cola \
      --do_predict=true \
      --data_dir=/content/bert/IMDB_dataset \
      --vocab_file=/content/uncased_L-12_H-768_A-12/vocab.txt \
      --bert_config_file=/content/uncased_L-12_H-768_A-12/bert_config.json \
      --init_checkpoint=/content/bert_output/model.ckpt-0 \
      --max_seq_length=128 \
      --output_dir=/content/bert_output/

    INFO:tensorflow:Restoring parameters from /content/bert_output/model.ckpt-1687
    I0713 06:08:22.372014 140390020667264 saver.py:1284] Restoring parameters from /content/bert_output/model.ckpt-1687
    INFO:tensorflow:Running local_init_op.
    I0713 06:08:23.801442 140390020667264 session_manager.py:500] Running local_init_op.
    INFO:tensorflow:Done running local_init_op.
    I0713 06:08:23.859703 140390020667264 session_manager.py:502] Done running local_init_op.
    2020-07-13 06:08:24.453814: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10
    INFO:tensorflow:prediction_loop marked as finished
    I0713 06:10:02.280455 140390020667264 error_handling.py:101] prediction_loop marked as finished
    INFO:tensorflow:prediction_loop marked as finished
    I0713 06:10:02.280870 140390020667264 error_handling.py:101] prediction_loop marked as finished

- The code below takes the class with the highest predicted probability for each row of the test data and stores it in a list.

Code:

    import csv

    # For each row of class probabilities, keep the index of the largest one
    label_results = []
    with open("/content/bert_output/test_results.tsv") as file:
        rows = csv.reader(file, delimiter="\t")
        for row in rows:
            data_1 = [float(i) for i in row]
            label_results.append(data_1.index(max(data_1)))
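The same step can be tried without running the model. The sketch below assumes that each row of test_results.tsv holds one tab-separated probability per class, and it uses made-up numbers in place of the real file.

```python
import csv
import io

# Made-up contents standing in for test_results.tsv (one probability per class).
sample_results = "0.91\t0.09\n0.20\t0.80\n0.35\t0.65\n"

predicted_labels = []
for row in csv.reader(io.StringIO(sample_results), delimiter="\t"):
    probabilities = [float(value) for value in row]
    # The predicted class is the position of the largest probability.
    best_class = max(range(len(probabilities)), key=probabilities.__getitem__)
    predicted_labels.append(best_class)

print(predicted_labels)  # [0, 1, 1]
```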

- The code below calculates accuracy and F1-score.

Code:

    print("Accuracy", metrics.accuracy_score(test["polarity"], label_results))
    print("F1-Score", metrics.f1_score(test["polarity"], label_results))

    Accuracy 0.8548
    F1-Score 0.8496894409937888
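For readers who want to see what these two metrics measure, here is a minimal sketch that computes accuracy and the binary F1-score by hand from true and predicted labels. The function name and the six sample labels are made up for illustration; scikit-learn remains the more convenient choice in practice.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Compute accuracy and the F1-score of the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return accuracy, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, f1


# Made-up labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]

accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(round(accuracy, 4), round(f1, 4))  # 0.6667 0.6667
```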

- We achieved about 85% accuracy and F1-score on the IMDB reviews dataset after fine-tuning BERT (BASE) for only 3 epochs, which is quite a good result. Training for more epochs may improve the accuracy further.

References:

- BERT paper

- Google BERT repo

- MC.ai BERT text classification
